Introduction

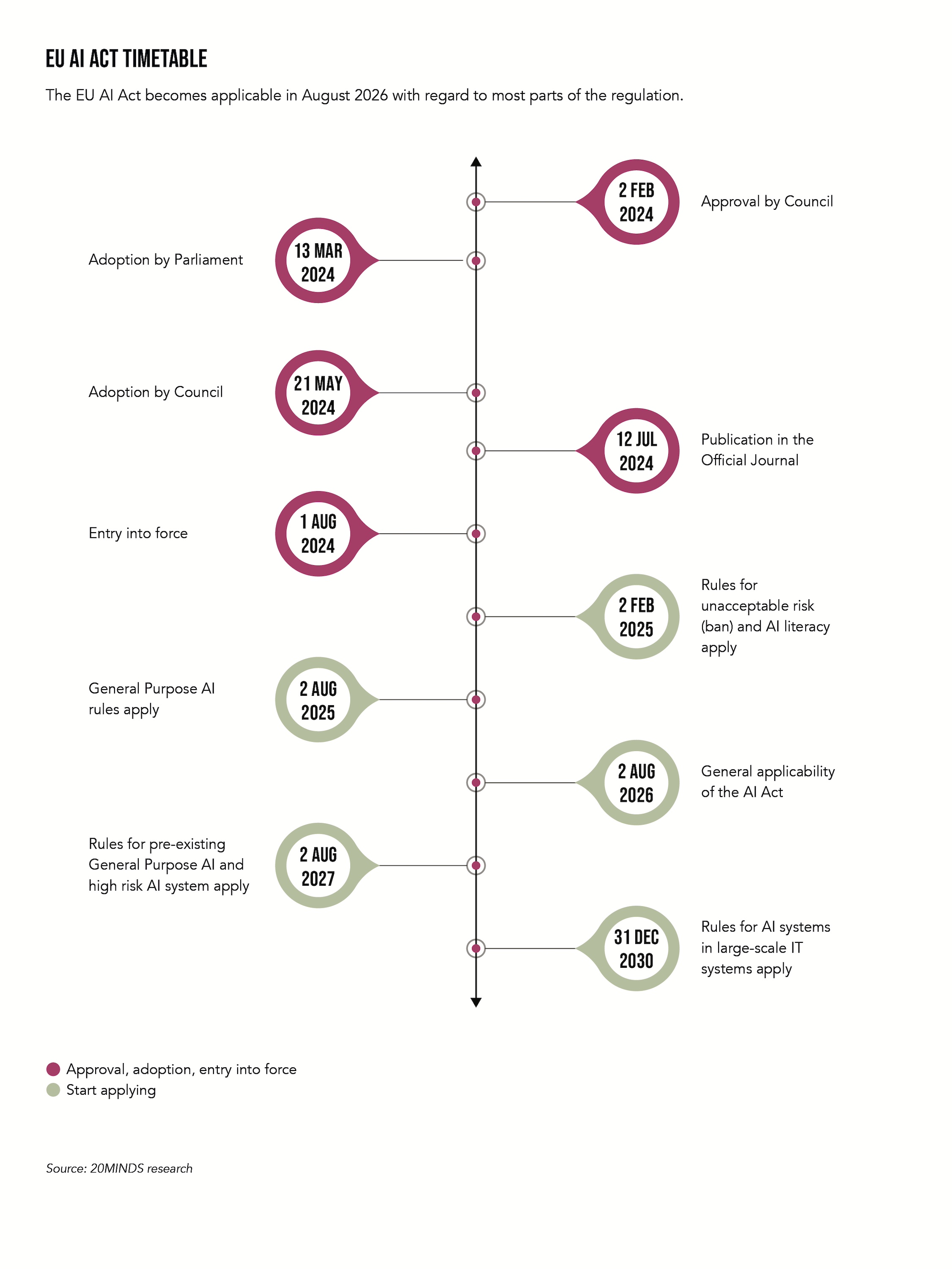

The EU was one of the first jurisdictions to propose AI-specific regulation and, in March 2024, the European Parliament approved the Artificial Intelligence Act (AI Act), the world's first comprehensive legal framework for the regulation of AI. It entered into force in August 2024, with the first obligations applying from early 2025. The AI Act is expected to influence AI regulation worldwide.

Yet our understanding of what AI technology really comprises, its actual potential for businesses and consumers, and the risks it poses to society is constantly evolving.

The desire of regulators to regulate the somewhat unpredictable poses unique challenges for businesses as well as investors.

Why AI governance matters: generally, and in the context of M&A

Despite the uncertainty, I believe the best choice for businesses is to be proactive and invest in AI governance now.

Sound AI governance is a key differentiator in an AI-driven world. Effective AI governance can reduce the risk of penalties and damages, enable companies to adapt more quickly to new opportunities and risks arising from the evolution of AI, and, if an exit or trade sale is on the horizon, justify a higher purchase price by building trust and confidence in the target’s regulatory readiness.

In short, an AI Governance Framework should be an integral part of any AI-related business strategy. This is true not only for companies in data-intensive industries, but across all sectors.

A unique due diligence challenge: the "black box" nature of AI

AI systems, especially those based on reinforcement learning, can be difficult to analyse and evaluate due to their "black box" nature. This becomes more pressing as such systems evolve over time.

The AI systems developed and/or used by a (target) company can live on and may evolve independently of their original training data. Traditional technical review processes may not be able to identify flaws in an AI system, because the historical performance of the AI system cannot be used to confirm that the dataset has not been poisoned over time.

This "black box" nature of AI can lead to a unique set of financial, legal and ethical risks for acquirers of businesses developing or using AI systems. Because it is difficult to spot problems or understand their magnitude, these risks can easily persist post-transaction.

To take a simple example: an employee uses generative AI to draft a memo and inputs confidential company data into the AI system. The data, an important trade secret, falls into the wrong hands because of the employee’s actions.

Without AI involved, this would be a classic data breach scenario, usually subject to contractual remedies as part of the transaction.

To mitigate such risks, transactional due diligence should also cover whether AI systems were trained on high-quality data and to what extent the AI systems are prone to producing erroneous, discriminatory or illegal outcomes (Garcia 2023).

A comprehensive AI governance framework can give comfort to the buyer that these risks have been appropriately addressed.

AI Governance: Where to start?

If the business relies on AI systems to serve its clients or for key internal processes, or else develops such AI systems, then an AI governance framework becomes a strategic imperative.

The first step should therefore be to categorise all the AI systems developed or used by the business.

In the context of M&A, this categorisation allows buyers to assess compliance and identify which investments in AI governance will be required after closing.

Depending on market conditions, the seller may then decide to establish an AI governance framework as a pre-deal compliance project or leave it to the buyer to ensure regulatory readiness post-closing.
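The categorisation step described above can be pictured as a simple inventory. The following Python sketch is illustrative only: the `AISystem` fields, the `Origin` categories and the example system names are assumptions made for this illustration, not a prescribed due diligence template. It groups systems by whether they are developed or used, and flags business-critical systems that are not yet covered by a governance framework.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    DEVELOPED = "developed by the business"
    USED = "used by the business"


@dataclass(frozen=True)
class AISystem:
    name: str                # hypothetical identifier
    origin: Origin           # developed or merely used
    business_critical: bool  # serves clients or key internal processes
    governed: bool           # already covered by a governance framework


def categorise(systems):
    """Group the inventory by origin (developed vs. used)."""
    groups = defaultdict(list)
    for system in systems:
        groups[system.origin].append(system)
    return dict(groups)


def governance_gaps(systems):
    """Names of business-critical systems without governance coverage."""
    return [s.name for s in systems if s.business_critical and not s.governed]


# Hypothetical example inventory
inventory = [
    AISystem("credit-scoring-model", Origin.DEVELOPED, True, False),
    AISystem("chat-assistant", Origin.USED, False, False),
    AISystem("fraud-detector", Origin.DEVELOPED, True, True),
]

by_origin = categorise(inventory)
gaps = governance_gaps(inventory)
print(gaps)
```

In a transaction, a list like `gaps` would be one possible starting point for estimating which governance investments remain open after closing.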

What an AI Governance Framework should entail

An effective AI Governance Framework should address compliance, ethical, and reputational risks while enabling the business to capitalise on the opportunities presented by new AI applications.

The risks are mitigated if the framework ensures that AI is safe, secure and resilient, explainable and interpretable, privacy-enhanced, fair, accountable and transparent, as well as valid and reliable (see, e.g., NIST 2023, p. 12). The opportunities of AI can be harnessed by businesses if the framework is well-targeted, aligned with the risks faced and adaptable to technological and commercial developments.

An AI Governance Framework should include at least the following components:

- AI Compliance Strategy: Sets out the approach to AI in the company generally, and to compliance with existing and upcoming regulations specifically. An effective strategy looks beyond the horizon. For example, a company's use of AI will often require compliance not only with existing AI regulations, e.g., the EU AI Act, but also upcoming AI regulation and existing data protection and competition laws.

- AI Compliance Management System (AI CMS): Helps manage compliance risks, ensures that AI adheres to regulations and ethical standards, and continuously monitors evolving regulations as well as guidance issued by regulators. Here too, the AI CMS should not only address specific laws, such as the EU AI Act, but also establish a common process, so that the system does not have to be reworked each time a new rule takes effect.

- AI Governance Body (AIGB): An internal body consisting of cross-functional and interdisciplinary teams that are tasked with implementing and further developing the AI Compliance Strategy and the AI CMS.

Implementation of AI governance: key steps

If an AI governance framework is not yet in place and must be implemented ahead of a transaction, this cannot be left to the last minute. Implementation involves several steps:

- Formation of the AIGB: It is critical that the AIGB has clearly defined roles, decision-making processes and accountability. Therefore, if the target's governance body is deeply embedded in the seller's corporate structure, the unbundling or carve-out of the AIGB should begin as early as possible. The governance body also needs sufficiently direct communication channels to the board. In the later stages of a transaction, it may be beneficial to extend these channels to the buyer, if competition law permits.

- Assessment and Planning: Assess AI readiness and establish clear governance goals and objectives. As AI technology may already be used by teams throughout the organisation and might be widely fragmented, it is essential to identify the AI systems early. Clear workstreams with set milestones are key, as many sprints in compliance projects depend on completing preceding tasks.

- Resource Allocation: Secure technology, talent, and budget; consider external advisors for compliance.

- Stakeholder Engagement: Involve key stakeholders from IT, legal, and product teams.

- Allocation of Responsibilities: Clearly define roles and responsibilities; consider appointing a Chief AI Officer (CAIO).

- AI Literacy: Develop AI training to raise awareness of risks and identify compliance gaps.

- Policies and Guidance: Develop comprehensive policies and procedures for the AI lifecycle, including data collection, model development, deployment, and monitoring; incorporate ethical principles.

- Risk Management Strategy: Implement and regularly evaluate risk management strategies; refer to frameworks from the IEEE, the EU, the Montréal Declaration, the AI Governance Alliance (AIGA), and the National Institute of Standards and Technology (NIST).

Importance of custom-tailored AI governance approaches

There is no one-size-fits-all approach to AI governance. AI governance frameworks are highly dependent on the products and services a company offers, how AI technology is used, the structure of the organisation, the applicable regulatory regimes, and much more.

Two points to note here:

- First, not all AI is created equal, at least not from a regulator’s point of view. The EU AI Act, for example, distinguishes between providers and deployers of AI systems. The bulk of the obligations falls on providers. For high-risk AI systems in particular, providers must fulfil far-reaching obligations, including (but not limited to) setting up systems for quality management, post-market monitoring, and technical documentation; providing instructions for use; and undertaking a conformity assessment. Some of the obligations continue to apply post-launch, such as the obligation to conduct post-market monitoring and update the required documentation. Deployers of high-risk AI systems must in particular assign human oversight and monitor the systems, ensure transparency and apply data governance. They are also required to suspend their use after the identification of specific risks and notify the competent authorities in certain cases.

- Second, regulators will focus on different things in different sectors. In advertising, the focus will likely be on fair, unbiased output and data protection compliance. In healthcare, the emphasis will likely be on system reliability, accuracy, and alignment with sector-specific regulations (e.g., the EU Medical Device Regulation (MDR)). In finance, the framework may prioritise compliance with sector-specific regulations (e.g., the Digital Operational Resilience Act (DORA)), including adherence to strict data usage and model transparency guidelines, and robust cybersecurity measures.
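The provider/deployer distinction in the first point can be sketched as a simple lookup. The Python snippet below is a minimal illustration, not a legal checklist: the obligation labels merely paraphrase the non-exhaustive examples given above for high-risk AI systems, and the names `Role`, `HIGH_RISK_OBLIGATIONS` and `open_obligations` are hypothetical.

```python
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"


# Non-exhaustive labels paraphrasing the examples in the text above;
# the AI Act itself remains the authoritative source.
HIGH_RISK_OBLIGATIONS = {
    Role.PROVIDER: (
        "quality management system",
        "post-market monitoring",
        "technical documentation",
        "instructions for use",
        "conformity assessment",
    ),
    Role.DEPLOYER: (
        "human oversight",
        "system monitoring",
        "transparency",
        "data governance",
        "suspension of use when specific risks are identified",
        "notification of competent authorities in certain cases",
    ),
}


def open_obligations(role, completed):
    """Obligations for `role` that are not yet in `completed`, in listed order."""
    done = set(completed)
    return [o for o in HIGH_RISK_OBLIGATIONS[role] if o not in done]


# Hypothetical target that acts as a provider and has documented its system
remaining = open_obligations(
    Role.PROVIDER, {"technical documentation", "instructions for use"}
)
print(remaining)
```

A sector-specific framework would extend such a table with the additional regimes that apply to the business, for example the MDR in healthcare or DORA in finance.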

Conclusion

As AI continues to permeate every industry, the importance of regulatory readiness, in particular in the context of an M&A transaction, cannot be overstated.

The challenges posed by AI-specific and non-AI-specific laws, coupled with potential financial and reputational risks, necessitate a robust AI governance framework. The market will focus particularly on this aspect in upcoming transactions as AI becomes more and more regulated.

Only a framework that is tailored to the unique needs and structure of the company will be able to effectively mitigate the risks while harnessing the full potential of AI. As we move forward, the ability to adapt and evolve in response to the dynamic regulatory environment will be a key differentiator for businesses in the AI-driven world.

Christoph Werkmeister is partner and global co-head of the data and technology practice of Freshfields.

Sources

- Garcia, A.C.B., Garcia, M.G.P. & Rigobon, R. Algorithmic discrimination in the credit domain: what do we know about it? AI & Soc 39, 2059–2098 (2024)

- National Institute of Standards and Technology, U.S. Department of Commerce, NIST AI 100-1, “Artificial Intelligence Risk Management Framework (AI RMF 1.0)”, January 2023

- Haan, Katherine, 16 June 2024. 24 Top AI Statistics and Trends in 2024. Forbes Advisor. Retrieved from https://www.forbes.com/advisor/business/ai-statistics